The Evolution of Microsoft Developer Tools: Insights from Scott Guthrie

Microsoft has been a cornerstone of the software development world for nearly five decades. From its early days focusing on developer tools to becoming a cloud and AI powerhouse, Microsoft’s journey shows how developer tools have evolved and shaped the tech landscape. In a recent in-depth conversation, Scott Guthrie, Microsoft’s Executive Vice President for Cloud and AI and a 28-year veteran of the company, walked through the milestones, challenges, and bold decisions that have defined Microsoft’s developer ecosystem.

The Early Days: Developer Tools at Microsoft’s Core

Microsoft started not as a software giant but as a developer tools company. Its first product, released in 1975, was a BASIC interpreter for the Altair computer, a foundational tool that enabled programming on early personal computers. This focus on empowering developers continued with products like QuickBASIC and Microsoft C, which helped developers build applications first for MS-DOS and later for Windows.

Scott emphasizes that Microsoft’s success was always linked to enabling developers. “If you don’t have developers building applications, you don’t have a business,” he notes. This philosophy continues today with Azure and modern developer tools.

Democratizing Development: Visual Basic and Beyond

In the 1990s, Microsoft made development accessible to a broader audience with tools like Visual Basic and Microsoft Access. Visual Basic, in particular, revolutionized development by allowing users to drag and drop interface elements and write simple code, making programming approachable for non-experts, such as financial traders.

One key innovation was the “edit and continue” feature, enabling developers to modify code while the program was running without lengthy recompilation. This dramatically increased productivity and foreshadowed today’s rapid development cycles.

Scott draws parallels to today’s low-code/no-code movements and AI-assisted development, highlighting how technology continues to lower barriers for creators.

The Birth of .NET and Visual Studio

Scott joined Microsoft in 1997, a pivotal time when the company was developing Visual Studio and what would become the .NET Framework. The goal was to unify various programming languages and tools under one platform, allowing developers to build robust applications more efficiently.

.NET introduced the Common Language Runtime (CLR), a shared runtime that supports multiple languages, including Visual Basic, C++, and the then-new C#. Scott and his colleague Mark Anders created ASP.NET during this era, pioneering web development on the Microsoft stack.

Visual Studio became an integrated development environment (IDE) that brought together coding, debugging, and profiling tools, raising developer productivity significantly.

Steve Ballmer’s Iconic “Developers, Developers, Developers” Moment

A memorable moment from this era was Steve Ballmer’s impassioned speech emphasizing the importance of developers to Microsoft’s success. Scott recalls that the core message was simple but powerful: winning the hearts and minds of developers is critical because developers build the innovative solutions that drive platform adoption.

This developer-centric mindset became deeply embedded in Microsoft’s culture, and events like Microsoft Build continue to reflect it.

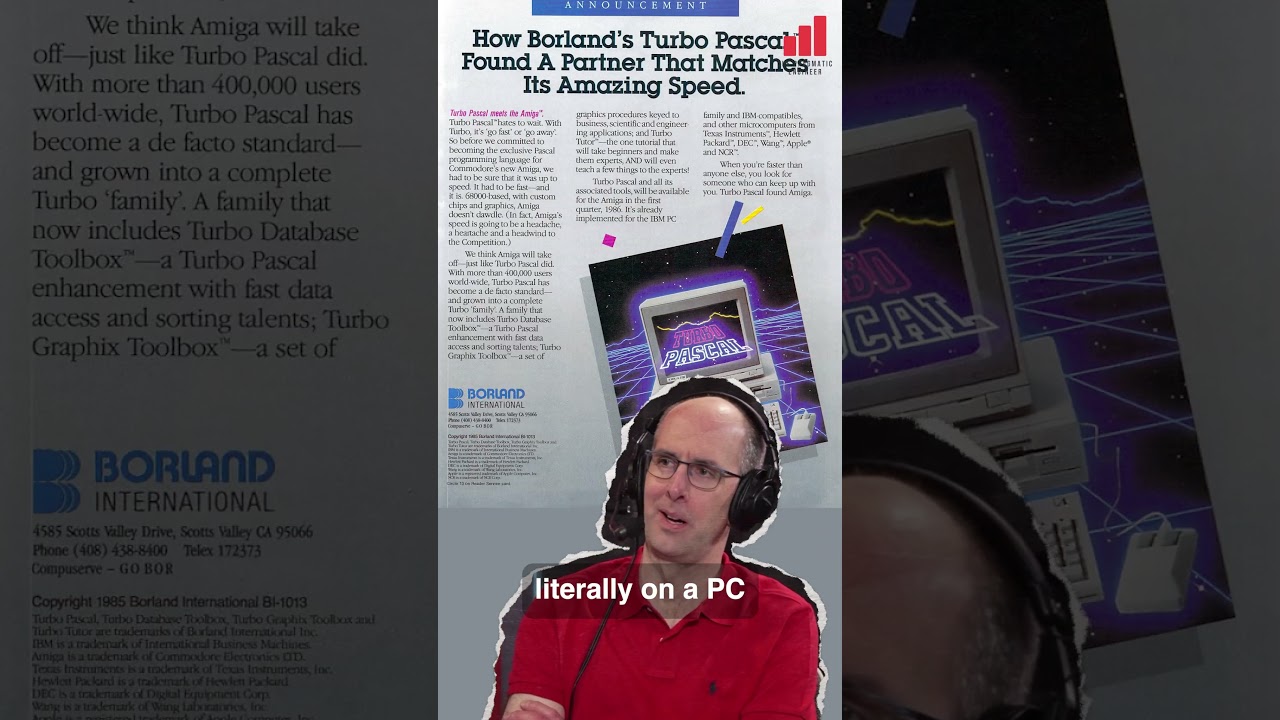

The Evolution of C# and Anders Hejlsberg’s Role

Anders Hejlsberg, the creator of Turbo Pascal, played a vital role in shaping C# and TypeScript at Microsoft. His expertise helped design C# as a language that balanced power and elegance, introducing features such as generics implemented in the runtime rather than by type erasure, which distinguished it from competitors like Java.

Scott praises Anders’ long-term vision and consistency, which have kept C# relevant and productive across multiple versions.

The Era of Expensive Tools and Documentation

In the 1990s and early 2000s, Microsoft’s developer tools and documentation were premium products. Developers often paid thousands of dollars annually for Visual Studio licenses and MSDN subscriptions, which included extensive documentation on CDs before widespread internet access.

Though costly, these investments paid off by dramatically boosting developer productivity. Scott recalls how MSDN was revolutionary for its time, providing a centralized, searchable knowledge base that was otherwise unavailable.

Cloud Computing and the Azure Journey

Microsoft Azure was announced in 2008 (as Windows Azure) and initially struggled against competitors like Amazon Web Services. When Satya Nadella took over the Server and Tools division in 2011, Scott was asked to help turn Azure around.

They found usability problems and a lack of support for open source and Linux. Through focused efforts, including support for Linux, virtual machines, and hybrid cloud scenarios, Azure grew from a distant seventh place to become a top cloud provider.

Scott highlights the importance of choosing the right markets and building developer-friendly platforms to gain traction, a lesson that applies broadly to startups and enterprises alike.

Embracing Open Source: From CodePlex to GitHub

Microsoft’s relationship with open source evolved significantly over time. Early attempts like CodePlex were limited, but as the business model shifted towards cloud and services, Microsoft embraced true open source with permissive licenses and community involvement.

Opening up .NET and adopting open source projects like jQuery marked a cultural shift. This openness paved the way for Microsoft’s acquisition of GitHub in 2018, a move that was initially met with skepticism but ultimately strengthened Microsoft’s position in the developer community.

The 2014 Turning Point: Three Bold Developer-Centric Decisions

In 2014, Scott and his team made three transformative decisions to regain relevance with developers:

- Introduce the Community Edition of Visual Studio: A free, fully featured version for small projects and independent developers.

- Open Source .NET and Make It Cross-Platform: Hosting the code on GitHub and accepting community contributions under permissive licenses.

- Develop Visual Studio Code (VS Code): A lightweight, open source, cross-platform code editor optimized for web developers.

These decisions, made in a single short brainstorming session, laid the foundation for Microsoft’s renewed developer momentum. VS Code, initially the most speculative of the three, became hugely successful and helped rebuild Microsoft’s relationship with the open source community.

Looking Ahead: AI, Developer Agents, and Cloud Innovation

Scott is excited about the next generation of developer tools powered by AI. Rather than just responding to requests, AI agents will become collaborators that can autonomously handle complex tasks, from generating code to monitoring application health.

He compares AI copilots to giving developers “Iron Man suits,” dramatically enhancing productivity and creativity.

Azure’s global footprint and hybrid capabilities will further empower developers to build scalable, secure, and compliant applications worldwide.

Advice for Developers in the Age of AI

Scott encourages developers not to fear automation but to embrace it as a productivity enhancer. History shows that tools like debuggers, garbage collection, and open source were once controversial but ultimately empowered developers and created more opportunities.

The key to long-term success is focusing on problem-solving, creativity, and leveraging new technologies rather than clinging to specific syntax or manual tasks.

Conclusion

Microsoft’s journey from a BASIC interpreter startup to a leader in cloud, AI, and open source development underscores the importance of bold decisions, developer focus, and adaptability.

Scott Guthrie’s reflections highlight that at the heart of every technological evolution is a commitment to empowering developers—whether through tooling, platforms, or community engagement.

For developers navigating today’s fast-changing landscape, the message is clear: embrace emerging technologies, focus on delivering value, and remember that innovation often comes from collaboration between humans and machines.
